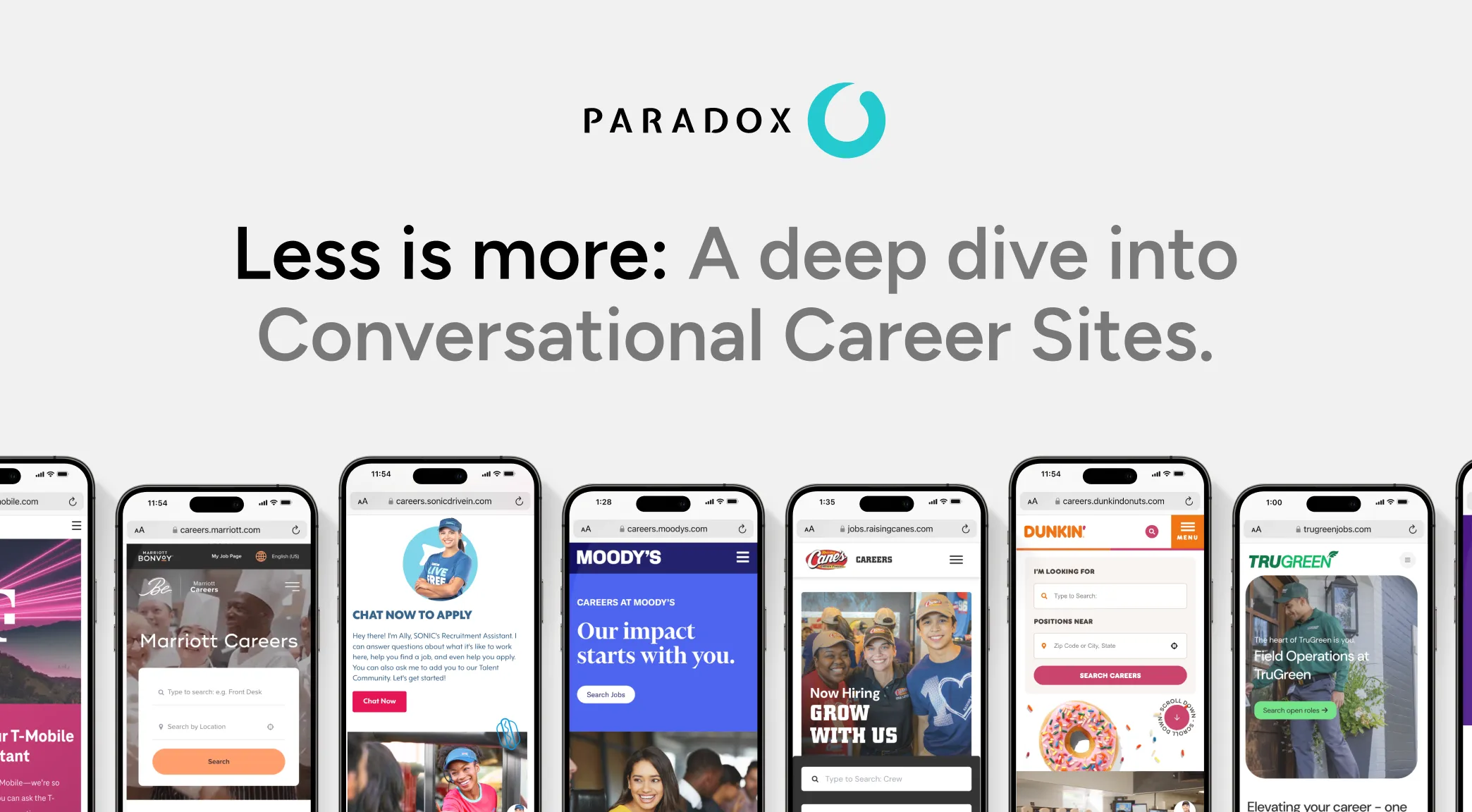

ChatGPT and Conversational AI: How conversations will change the way we work.

Discover a beginner's guide to ChatGPT and how to find the keys to maximizing conversational software in the right places.

Conversational AI has evolved. What's next?

ChatGPT. Bard. Bing. Conversational AI has gone mainstream — billions are being thrown around by tech giants to keep pace in the AI gold rush while the rest of us try to make sense of what it means, now and in the future. Is it good? Is it bad? The honest answer: it’s complicated. The value of conversational AI to automate certain tasks is more apparent than ever … but so are the inherent risk factors. The key to maximizing conversational AI in the talent and human resources spaces will not just be about using it for certain tasks, but ensuring we use it for the right ones.

Watch the webinar to get answers on:

- What even is conversational AI? What about large language models? Or generative AI? Yeah, we'll answer that and then some.

- So what? We'll break down what's fact or fiction, hype or reality, and ultimately what actually matters to people who hire people.

- Am I being replaced? No, you're not. We'll explain why conversational AI isn't a replacement, it's a copilot.

Meet the speakers.

A 10 year veteran in the HR tech space, Adam was previously chief technology and product innovation officer at Cielo, a recruitment process outsourcing (RPO) firm. Now at Paradox leading a global product team, his task is simple: build the world's leading conversational AI platform for talent.

Josh has led talent acquisition teams for some of the world’s largest brands, including McDonald’s and Abercrombie and Fitch, designing people programs and experiences that helped those brands fundamentally transform hiring. As VP of marketing and advocacy at Paradox, Josh collaborates with global employers to champion their transformation efforts.

After serving as the CIO of Amgen for six years, Diana McKenzie joined Workday as its first-ever Global Chief Information Officer in 2016. A thought leader and frequent speaker on digital transformation and diversity, she co-founded the Silicon Valley Women’s CIO Network in 2017.

Watch the on-demand webinar:

Adam Godson (00:04):

All right. Well we've got a good crowd here as we get started at the top of the hour, so I don't want to delay us any further. We've got lots of exciting things to talk about. First of all, I'll say thanks to you for joining. I know you all have a busy day and lots of things to do. So thanks for spending a little time with us to talk about one of the topics that has just thrilled our teams and thrilled the tech community — about large language models and ChatGPT and generative AI. We're gonna clarify lots of those terms and more as we go. And to do that I have a couple of special guests, and these are guests that are highly curated as I thought about the people I would most want to have a conversation with about this topic. These two folks came to mind. So I will let them do a couple of quick intros, but we'll start with Diana McKenzie, former CIO of Workday.

Diana McKenzie (01:00):

Thanks Adam, and great to be here with you and Josh. Great to be here with all of you on the call. So I'll start with the fact that I am delighted to be a board member of Paradox, and that is building on three and a half years where I served as the CIO at Workday — the first CIO at Workday. Prior to that, I spent about 33 years in life sciences and served in the role of CIO in my last life science job at Amgen. I find this to be a fascinating topic and very much looking forward to the conversation today. So thanks again.

Adam Godson (01:36):

Yeah, Diana, thanks so much for joining us. And we just so appreciate your technology expertise at organizations, large and innovative and in all things. And so, thanks so much for being with us. And thanks also to Josh Secrest. I will let Josh — you make your own introduction — but thanks to you, as well.

Joshua Secrest (01:52):

Yeah, hi all. Adam, I can't wait to pick your brain. Diana, same. This is gonna be a good conversation. I'm our Vice President of Marketing and Client Advocacy at Paradox. And prior to joining Paradox, was the head of global talent acquisition at McDonald's. Also was able to lead global talent strategy there. So in that role, I was able to oversee a corporate recruiting team doing global corporate TA work, as well as kind of consulting to our restaurants around the world. So that's over 37,000 restaurants and making a couple million hires per year. So got to kind of see the high volume side and the recruiting side. And then prior to that spent a good chunk of my career at Abercrombie and Fitch, where I led the global TA team and some of the talent functions out there.

Adam Godson (02:41):

Fantastic. Josh, thanks so much for joining us. Your experience is second to none, and I always love hearing your thoughts on complex initiatives. And I'll do an intro for myself as well. I'm Adam Godson. I'm Chief Product Officer here at Paradox. And so my job is to figure out what Olivia should do and then make that happen. And I'm a TA technology professional; been in this space about 20 years, and it's all I know, it's all I think about <laugh> and so I'm especially excited when it comes to the application of new technology for talent acquisition. And thanks to all of you for joining us and for spending some time. We're gonna get started. We want very much for this to be a conversation, of course, among the experts we have gathered here today, but also with you.

(03:29):

And so please do use the chat function, and we will leave large sections for Q and A throughout and be sure that we take your questions and get them introduced into the conversation here which we very much look forward to having. And so, with those intros I'm actually gonna start a little bit with some framing. And one of the things that I know we talked some about is: words. The definition of some of the words in this space that are new and becoming somewhat interchangeable. So we framed this around ChatGPT and generative large language models. And so I wanna talk about what some of those terms mean. And so a few of them that are important:

(04:16):

The very first one is generative AI. And so generative AI, I think, is the most explanatory in its name, in that it literally generates something that didn't exist before. And generative AI can have different terms, or different mediums, I should say, for its output. So you can generate text with something like GPT. So you can say, "Write me a poem about my high school graduation," and it will write you a poem about high school graduation. You can also do that for images with services like DALL-E 2. My kids love this: "Paint me a Monet about stormtroopers going grocery shopping." And there we have it. And also with video.

(05:05):

So make me a video that has maybe some different criteria. And those are based on large language models, which is another term for the underlying technology. So when we talk about GPT-3 or GPT-4, and you hear those terms, LLaMA, BERT, others, those are really the large language models on which these tools are based. And so that is sort of the statistical model of having billions of parameters of words that are related to one another. And then the last one I wanna talk about is conversational AI, and sort of the spark moment that ChatGPT has created. Conversational AI has existed for some time. And so conversational AI is defined as a conversation between a person and one or more machines. And that conversational AI has existed in lots of different ways.

(06:02):

But the real spark that really happened recently with ChatGPT was the combination of conversational AI and large language models. And we'll talk a little bit about that as we go, which has created this moment for us and all the investment in AI. And so I wanna talk to our experts a little bit then about — you know — how, why this matters, and what's changing in our world as we go forward with some of the new paradigm going forward. Maybe Josh, I'll start with you. As a talent leader, why does this matter to you? Why is this important?

Joshua Secrest (06:39):

I just get really excited about it. I think for our teams, regardless if you're thinking about <inaudible> or you're thinking about corporate hiring, it's all about how do you use these talents of our recruiters in the best ways. How do we use their brain power? How do we use their negotiating skills? How do we use their influence in the best ways? And I think what we've been able to see with conversational AI is this ability to start to have almost an assistant that's getting work done for you. And I think that's been really exciting.

(07:19):

Some of this generative AI, it feels like that can almost be magnified. The types of complex tasks that my recruiting team could potentially do, even if they don't use it all the way to fruition. But I think in the space, probably the most common things that we're all talking about right now are job descriptions, Boolean searches, the ability to craft interview questions, or the ability to craft an email for outreach. It is to be able to supplement what our recruiters do, and I think it gives them back more time to do some of those unique things that ultimately they were hired to do, that I think a computer can't do. And so it makes me really excited to be able to arm our teams with that type of technology and see how they're gonna be able to innovate.

(08:16):

And who doesn't like enabling your team to be able to play a little bit? Right now we're at this stage where it doesn't feel like anybody's behind quite yet. It's just this ability to be able to play and to test and to ask questions and to be curious. And frankly, I think the learnings you can have with some of this technology that's out there — you can probably almost have just as many epiphanies using it, and life outside of work that can then translate maybe into a really cool idea inside of work. And so maybe the piece really exciting there is that amazing kind of crossover of sparks or creativity that can be happening outside, that then can maybe yield to something transformative for our TA or recruiting teams.

Adam Godson (09:10):

Yeah, Josh, I love that — the feeling that there's some freedom in knowing that folks that are using this technology are ahead and have a chance to get ahead. No one's behind yet in that way. And so there's a freedom in being able to be experimental to get those gains. I really love that. Diana, maybe I'll ask you the same question. You can choose either in life or in business — why does this matter?

Diana McKenzie (09:36):

Yeah, I think it's really exciting and I also think that it fosters the curiosity that we all have to better understand how other people have used it, and then think about how you can apply it on your own, whether it's in your personal life or your professional life. So I'm actually gonna share just a real quick example across the continuum. So on the personal side, my husband and I have had scuba diving in Iceland on a bucket list ever since Covid hit. And we kept putting the trip off, and we've looked for places that have great itineraries, but they all seem kind of staid to us. So I thought, “Let's ask ChatGPT.” And I asked ChatGPT to give me an itinerary of a scuba diving and above-ground sightseeing trip when the northern lights were happening.

(10:25):

And I got a six day itinerary of every single place we needed to go and where we would stay. There weren't enough scuba dive options in there, so I said, “Can you add some more scuba options?” And it came back with an eight day itinerary and four more dives. And then I said, “Can you generate a pack list for me?” And it generated an eight bullet point pack list that even told me what kind of charger I needed to take in order to adapt to the power supply. So I was delighted. And I think the beauty in that is, I will still go to a travel agent. I said, “Tell me the top four travel agents who can help me convert this itinerary to reality.” And it gave me four options, but I'm gonna be so much more well prepared with better requirements when I go to that person, and it's gonna make it easier and more fun for them to do their job. And I think when we translate that into the work environment, it's exactly what Josh said. We have people that have tedium in their jobs, that's sometimes a very large portion of what they do. And as professionals and leaders, our goal is to try to figure out how to remove the tedium and move them to the higher value task where they find more joy and more fulfillment. I think ChatGPT is making that possible in the work environment.

Adam Godson (11:34):

Yeah, I love that. I love that. Because I think there's always a little fear when it comes to new technologies about, “Will this replace my job or part of my job in that way?” And I think my perspective on that certainly is that right away, that's not the case. But certainly people using new technologies will be superpowered and be able to get more work done. And I think they also let people think about a higher order of things they want to be doing. I've known a lot of recruiters in my life, and many of them are just, frankly, not great writers, <laugh>. And they don't enjoy it. They don't wanna be doing it. And so they can craft an email to a candidate when they need to. But having someone else to do some of the writing. They love to talk to candidates, and so whatever can get them talking to more people makes them happy. And so I think moving people towards what makes them happy in their highest best use is really impactful there.

Joshua Secrest (12:30):

Adam, maybe from a practitioner side — I mean, we're all kind of digesting so much of this so fast, and we were just starting to feel like we were getting the hang of ChatGPT-3.5, and then four comes out and we're reading that it's a hundred times better? Can you help explain maybe to those of us less on the tech side? I mean, how did it get so much better so fast and what are some of those differences that we should be thinking about?

Adam Godson (13:06):

Yeah, it's interesting, like the world here is moving very fast, but also you have to realize that the world here has been spinning for some time. And so GPT-3 as a model was introduced almost two years ago. And so our team at Paradox, and many others, have been playing with just a large language model generally. And the spark moment came in December when they released ChatGPT by making it conversational. And so that was when the interface changed. That's when they got a million users in five days. But that was all built on GPT-3, which had been out for almost two years at the time. And so it shows you how much the interface really matters. But the transformer technology that this is all built on has been around for some time. Large corporations, OpenAI, Facebook, Microsoft, Google, others have been working on their own large language models for years.

(14:07):

And what's happened now with the explosion is the economic and sort of peer pressure to get yours out the door, <laugh>. And so Facebook has been working on LLaMA for some time, and Google has been working on Bard. But nobody wants to be behind and lose a first-mover advantage. So while it seems like these things are coming out every two weeks, I assure you they did not take two weeks to build. They've been in process for several years and are just being accelerated to market, which has its pros and cons as well. But either way, the world here is moving incredibly fast. Every day there’s news to wake up to, to think about how this might be used in the future. And so, Josh, maybe I'll ask you a little bit about some of the game-changer ideas here that salivate in your mind. You mentioned a couple earlier as you were talking about that. What are some things that excite you about this as a talent leader, thinking about the future with generative AI?

Joshua Secrest (17:05):

I think it's really easy to sometimes think about ChatGPT and how much it's gonna transform the world of corporate offices. And it's gonna be really interesting, the iterations that are gonna happen there. But I also think about that manager, and how this can potentially help them quickly create a job description they need to put out. So as soon as they get the resignation, they can get someone staffed and have sort of no impact — or minimal impact to maybe one of their guests. So really excited about some of those pretty quick and easy ways to help frontline work on the recruiting side. It really goes back to this — we have talent recruiters who spend 50 to 60% of their time off and on more of this administrative work.

(17:51):

So for them to be able to particularly go to manager meetings and bring these really impressive kickoff preps any time there's an open job, I mean, think of the possibilities that we have there now. They can do pretty much full research on the candidates in the marketplace and the supply and demand that's going on there. They can actually brainstorm some of the different tactics that can be used and where to post the jobs, and arm their hiring manager with a lot more information. So I think even within the next couple months, how people are gonna infuse this into their ways of working is really neat. And I don't think, and I know we'll get into this, but I don't think a lot of these idea starters or abilities to just kind of start some writing are too concerning. I think it's a way to be able to jumpstart ideas pretty quickly, without maybe using a hundred percent of what ChatGPT's writing going out as like your communications, for example.

Adam Godson (19:03):

Yeah, I love it as a brainstorming tool. Something to get some of the first juices going on that, which is great. Diana, how about you? What excites you for use cases?

Diana McKenzie (19:18):

Yeah, so I have sort of two different ways of thinking about this, and I'll start with the use case that fits the audience around the way in which we wanna manage, retain, and engage our talent within our companies. One challenge that has been elusive to every company — every CIO, quite frankly, at HR organizations, corporate communications groups — is how do you make it possible for all of the employees in your company? Recognizing you have so many different personas, you have people who are in the field, potentially, you have hourly workers, on the manufacturing floor. How do you make it possible for them all to get access to content that's really engaging for them about what's happening in the company and what's relevant to them? And I think historically, and even today, the tools have really lagged behind in that space.

(20:05):

But if we think about the power of something like ChatGPT-4 and what lives underneath that, and match that to Josh's point about personalization. If I happen to be a customer service agent and I'm sitting in Chicago, but the company's headquartered in the Silicon Valley, how do you get content to me in a way that it is meant for me and it speaks to me, in the place that I'm at, with things that are happening in my community? I think there's tremendous potential down the road for us to solve a big problem that's been out there for companies for a while. I'd launch to another frame that I use a lot, because right now where I spend a vast majority of my time is in healthcare. I serve on a couple of healthcare boards. And artificial intelligence is something that healthcare companies and life sciences companies have really been working with for many, many years.

(20:54):

But the notion of taking something like what we're seeing now with ChatGPT-3.5 and four, and giving it to physicians, goes back to that, “How do I take the friction out of my environment? How do we get more time from the physician when we're actually in the examination room with them, and make it possible for them to generate their translation of the conversation they've had with me and/or send me the notes that I need to have when I go home to follow up on everything they've said I needed to do?” So it's really great to see the personalization being built in, and companies taking advantage of this now to help physicians take some of those more tedious, time-consuming tasks out of their way so that they can spend more time interacting with their patients. I think it's tremendously exciting.

Adam Godson (21:43):

Yeah, I love that. I love that there's lots of great examples like that. I think one of the things that I like most about conversational AI is that it requires no training. That's the hypothesis: that by its very nature, it is natural and conversational. And so it's always been a tricky point rolling out software. How do I train all my users? How do I have all these sessions to train people how to use it? And in the future, there's a way to have your users trained as they use the software. And so be able to ask questions, “How do I do this? What's the policy for that?” And be able to understand what they should be doing by asking questions as they have them. Rather than the expectation today, where users get trained, have to remember a bunch of things, and then have to get support along the way. So I think that's a really important paradigm shift there. And another...

Diana McKenzie (22:41):

Adam, can I just do a quick launch from that? So I think that also lends itself to — and we're seeing this — a bit of a renaissance in customer service today, right? So the fact that we can personalize that customer service, and — to your point — take everything we know about training and about challenges people have run into whenever using technology. And it doesn't just have to come from that company's internal knowledge base. It can come from a much broader set of content and information to improve that experience that our customers have with us. So…

Joshua Secrest (23:09):

And I think that's a really interesting piece and maybe a gap for me is — how do we go from where we are right now with ChatGPT, which seems like this really broad and so deep general knowledge base, to having something like this within our companies or within our enterprise, where I can ask it specific questions, and it dials up something maybe more specific to my organization? Is that right around the corner, is that already here? How's that start to look, Adam?

Adam Godson (23:45):

Yeah. Here and around the corner. So I think the early versions are here with some vector databases that can ingest content and then be able to have that content queried and then be able to pull back some answers from that. I think the point you get to though, Josh, is the idea of, “How does this get into my flow of work?” What I catch from a lot of people today is that they have ChatGPT open, maybe in a web browser tab, and they will paste things into it throughout the day as they think of it. And that's simply because we're early. And so the next phase is thinking about how this gets to be in the flow of the things that I do every day.

(24:32):

And I think that's probably the most important point about this technology, as opposed to, if you can think of other things that have had the potential to make big changes in the way we work and haven't. Voice is one that jumps to mind for me — about voice technologies. Where it probably just hasn't taken off like you thought it might. I think the Alexa study was that users discover all the features they will ever use in the first 20 minutes of owning an Alexa device. And they really use it as a timer <laugh> and to play music. And so it hasn't really transformed, and that's because it's not been in the flow of what people do on a day-to-day basis.

(25:17):

It requires you to virtually say out loud, “Hey, Alexa,” or, “Hey, Google,” and to do something you wouldn't normally be doing. And that's actually why conversational AI has been so effective in recruiting for Paradox, to be honest. It’s because we're in the flow of where people are, which is mostly SMS. And so being sure that we can be sending text messages to people and having them respond in natural language, and being able to be in their flow of natural communication. So I think that natural element to how we design it, first for candidates, but then also then for all of the recruiters and managers that use the software in the flow of work to execute work with other systems. And all those integrations and all the messy stuff will still be tremendously important to work with other tools. So while it is very magical on the language, there's still a lot of hard work to do to make it get things done.

(26:18):

Maybe I'll transition a little bit into Diana thinking about your experience as a CIO. Tell me what are you thinking about as new technology comes to you and you have to think about how to manage this in an organization. What are some of the downsides you think about? What are some things that maybe you'd be concerned about, and that our listeners might be thinking about as they interact with their own CIO — or are a CIO?

Diana McKenzie (26:46):

Yeah. No, it's a great question. I think there's — as we've said — there's tremendous promise here, but there's also a need to be tremendously cautious. And especially if you happen to be inside a highly regulated company, highly regulated industry, because there's as much that this technology can do that's not for good, as it can for good. And, one of the conversations that Adam, Josh, and I have had is about AI ethics. And oftentimes when we hear that phrase “AI ethics,” you have this general feeling of it's “squishy,” it's really hard to put your arms around it. How do I apply that when I've got a proof of concept staring at me that has a really good use case?

(27:37):

And I'd shared that I'd had the opportunity to hear Dr. Reid Blackman present on a book that he wrote called Ethical Machines, that I thought actually gave a pretty decent structure for how we think about this. And it's not really just the CIO. It would be the CIO, it would be the business leader with the really great use case. And especially if you're in a regulated company, I would say do not not do it, right? But be thoughtful about your experimentation and start small. Make sure you have a really good team. Have somebody in there that really understands these models. Somebody who understands your regulatory environment, somebody who understands the importance of keeping the data safe. Someone who can think about bias. So cross-functional, almost like a scrum team, but maybe of a different makeup, if you will.

(28:27):

And the thought process that you can give to whatever it is you're trying to do the proof of concept with is, “Is this AI for good?” So are we trying to create something completely new that has really very little downside? Or “Is this AI for not bad?” Which is, it's going to have some sort of selection element to it. So we wanna make sure that it's not biased. We wanna make sure that we're following all of our privacy laws as we bring the data together, whether that's our data and then an API to one of these underlying large language models. Are we thinking about how we protect that data? So we think about the different component parts of ensuring that whatever model we build, it is a model that we can explain. So when someone asks, “Well, how did you get the result?” you are able to provide a pretty transparent, scientifically based method for how you built the model and how you tested the model. And I think that needs to be more rigorous the more selective the model becomes and the more regulated you are in that environment. And I think overall it's really a pretty common-sense approach that we take whenever we bring a new technology in. It's just this one in particular: “Use a little bit more caution” is what I would say, if you're the CIO and you're thinking about doing this with your team for the first time.

Joshua Secrest (29:50):

Are there any broad strokes either of you might be able to recommend for CIOs, maybe chief legal officers, chief people officers, in terms of general guidance? Because I think you're right, Diana. We want our teams to play. There is a huge benefit to them feeling comfortable and playing within the confines of work, but there needs to be some caution here. What would be the guidance or the guardrails right now? We've got a couple questions already in chat, Adam, on confidentiality. “What should I upload? Maybe, what shouldn't I upload?” Anything you can provide there.

Adam Godson (30:35):

Yeah, it depends a bit on if it's being used through an API, through a third-party provider, or if it's in the ChatGPT interface. The terms of service, of course, are gonna govern that. In ChatGPT, that data's all used for training. And so I would be careful about doing things like taking personal data from an applicant and posting their resume into ChatGPT and saying, “Is this resume better than that resume?” Data privacy-wise, that's probably not a great move. But by contrast, if you took someone's LinkedIn profile, which is public, that is more in the realm of fair game. So I think there's some nuances that are on data privacy. And I think the second thing to be cautious about is asking ChatGPT or any large language model to make decisions.

(31:25):

And certainly there's a whole — there's another webinar we'll do about the regulatory world around that. But saying things like, “Is this candidate better for this job than this other candidate?” Or things like that. I think, when you boil down recruiting sort of all the way to its essence, it's really about communication, decisions, and a bunch of administrative stuff. I think our position is we'd love to use technology to automate the communication and really focus a lot on the new things that are available there. Definitely work a lot on the administrative work, but let's continue to leave the decisions to people at this point. So I think there's just a couple areas that I would say can be hot buttons.

(32:11):

I love that we are getting some great questions. These are really, really, really fantastic. But we'll keep diving into some of those. Josh, how about you from a change management perspective maybe, or from a “challenges incorporating new technology as a talent leader?” How do you think about that?

Joshua Secrest (32:31):

Yeah, for this one in particular, I think it is the partnership, kind of across the aisle. I'd want to make sure that I'm pretty close to my DEI leaders right now. I'm pretty close to my legal team right now and getting some of this guidance. I'm really close to my CIO. I think just us all being aligned is gonna be key because I think there'll be a lot of shiny objects — especially in the next zero, or I guess negative three months, to next couple years — that I think we're gonna get really excited about. But just kind of making sure that we know how we're piloting, how we're testing. I think separately, it's where TA, HR, and just like in general “the world of work” start to really merge together.

(33:17):

And so I'd want to have a plan that's starting to map out probably with our COO, in terms of does this open any doors for us to build some new things internally? Does it open up some really interesting roles for us to pilot and test maybe under the umbrella of our CIO or CTO, some different things so that we can be on the forefront of what guidance or what innovations we could be coming up with? How should we be vetting some new vendors that are potentially coming out with some really interesting things? Where should we be pushing some of our current vendors? So yeah, I mean, kind of across the board, in lockstep, in terms of how do we give the freedom to play and innovate sort of, especially outside of work. And then some confines, or at least like “areas of caution” inside of work. But I think that alignment across the top would be really helpful.

Adam Godson (34:12):

Yeah, I think that's important to think about, like some governance of how we think about these tools. I imagine lots of new policies are being written — maybe by large language models themselves — about how to govern in organizations. Because, yeah, we got—Yeah what do you got Diana?

Diana McKenzie (34:28):

Adam, I was just gonna say, I think this is one of the lessons we can learn from history. As new technologies have hit this hype cycle, there can be a tendency to try to build this overarching, what-can-become-a-very-bureaucratic structure that will slow you down. And I think what we've learned from all the lean startup principles is: if we start small and we start with a use case that's compelling, and we start with a use case that doesn't have the potential to do a great deal of harm, we can learn from that. And the lessons we learned from that one iteration can help us over time decide what that ultimate structure needs to be. But you almost have to learn a little bit before you could say, “I'm gonna put this major, generative AI structure in place for my company.” So I would just caution: no one is behind. It's very early. We're still learning a lot. But I would caution against waiting until you put this big structure in place, because you may find that by the time you get that in place, you are behind.

Adam Godson (35:30):

Yeah. The counter movements are always interesting. We saw Italy, I think last week, banned some of the technology and some open letters to slow down <laugh> apart from the tech community. And so it's interesting to have some of those counter reactions. But I think the logical progression approach is one that is really valuable to think about. And so I imagine that there are lots of companies that are thinking about the problem of hallucinations — and a “hallucination,” for the group, is something that a large language model would say with great confidence that isn't true, or is something that it wouldn't have been trained to say. And it’s something that can happen and is one of the dangers.

(36:17):

And so I know there are many, many, many lawyers in the world that are freaking out <laugh> and thinking about all the liability that could exist in the world about “untrue things said with great confidence,” and then thinking about who might be liable. And so I think you're right, Diana, it's not that we have to jump all the way to the end of this, but what are some things that we can do in quick progression? For us, we've been playing with OpenAI tools for years, and one of the first things we built was a job description generator. So give it a job title and be able to have it generate a good job description that would have good SEO and <laugh> people will be able to find.

(37:00):

But a human has to approve it. And so the risk on that is incredibly low, to be able to do that. Because a human can edit that. And several others have mentioned in the chat that they use it as an idea starter to think about an email and then edit that. And those are really low risk ideas. When it comes to the “true generation” is where people’s hearts will pump <laugh> when you think about any AI saying things on behalf of a company that are being cited for the first time. And so there are some that will be excited to jump in with both feet. There are some that have really big legal teams that will say, “Heck no, never.” And so we just have to take a nice logical progression about how that looks in the future.

Joshua Secrest (37:47):

Adam, can you go in a little about data? I think we use the term large language model. I think our brains kind of quickly go to, “Wow, this is leveraging so much data.” There’s things you've kind of explained to me before on using this as a tool to actually do some really sophisticated analytics, maybe within your organization or within your recruiting process. Can you go into why those are maybe slightly adjacent worlds and how we can approach that?

Adam Godson (38:23):

Sure. And that world is changing fast as well, as we think about new opportunities for plugins and other things we can jump to. But for example, large language models like GPT-3 and GPT-3.5 are not particularly good at math. So if you ask them to do a five-digit multiplication problem, they will often get the wrong answer, because they're trained on words, not numbers. As time goes on, GPT-4 is actually much better. It can do math in an improved way, but it gives fairly obvious data analysis <laugh>. So if you give it a table of numbers and say, “Tell me something interesting about this.” Or “Tell me about the trends here.” You'll get fairly benign things like, “This went up,” or “This went down.” But not the kind of analysis you'd actually have, like from a data analyst, to use. And so I think there's something to be desired there, as we think about the future and getting some of those insights. But I expect that to continue to improve, as we plug in with other services that are built for some of those different applications. We expect that to continue to evolve.
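To make the point about plugging in other services concrete, here is a minimal Python sketch of one such service: ordinary code that does arithmetic exactly, so a multiplication never has to be answered by the language model. The function name `exact_arithmetic` is hypothetical and chosen for illustration; it is not a description of any plugin or product mentioned in the webinar.

```python
import ast
import operator

# Hypothetical helper (not from the webinar): arithmetic is handed to
# ordinary code, which is exact, rather than to a language model.
_OPERATORS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def exact_arithmetic(expression: str):
    """Evaluate a plain arithmetic expression such as '48213 * 77654'."""

    def _evaluate(node):
        # Plain numbers (booleans are rejected even though they are ints).
        if (
            isinstance(node, ast.Constant)
            and isinstance(node.value, (int, float))
            and not isinstance(node.value, bool)
        ):
            return node.value
        # Binary operations limited to +, -, *, /.
        if isinstance(node, ast.BinOp) and type(node.op) in _OPERATORS:
            return _OPERATORS[type(node.op)](
                _evaluate(node.left), _evaluate(node.right)
            )
        # Negative numbers such as -5.
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -_evaluate(node.operand)
        # Anything else (names, function calls, etc.) is refused.
        raise ValueError("unsupported expression")

    return _evaluate(ast.parse(expression, mode="eval").body)


if __name__ == "__main__":
    print(exact_arithmetic("48213 * 77654"))
```

The helper only accepts numbers and the four basic operators, so text that is not simple arithmetic is rejected instead of being executed.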

Joshua Secrest (39:46):

And then maybe one other on top is: languages and just translating. Is this better than Google Translate? Will it be better than…? What does that look like from a language perspective?

Adam Godson (40:02):

Sure. Today, I would say it's not quite there. Google Translate has had a large team working on it for a very, very long time. But the ability to use translation does exist in the newer models. And so there's lots of promise there, as languages are complex things. But most of it is based in English today, which is in itself a bias, actually <laugh>, as we think about how things operate in the world. But I expect that to come along quite quickly as well. But it is something that should be said, to think about other long-tail languages that aren't the top 10 most popular in the world, how that may impact their productivity growth over time.

Diana McKenzie (40:52):

Well, and then there are different ways of thinking about language as well, and that is, if I am visually impaired or I'm hearing impaired, there are some aspects that are already there that could help us do a better job of reaching our employees through tools like this.

Adam Godson (41:08):

Well, in that case, exactly. I think the convergence now of voice in that possibility is really exciting as well — where I think the interface for many things will become both chat and voice. And those two things will become interchangeable as voice-to-text and text-to-voice improve exponentially as well, which is also happening. And I'm actually really excited about that. It'll open up a whole new avenue for individuals with disabilities.

(41:41):

Maybe a couple of questions, as we think about our group. Josh, I'll ask this one to you. Where do you see the future intersection of the skills- and capabilities-based HR approach and ChatGPT and large language models?

Joshua Secrest (41:59):

Yeah, a lot of this comes back to the personalization component that I think is really exciting. I think, for that skills model, one of the best use cases for it is some of this internal mobility and internal movement. “How can I get trained and find the right place that's right for my career?” is the next step. “So that my employer then maybe doesn't have to go to the outside. They're gonna continue to invest in me.” I think there's been some really great progress in that space, but I think how amazing that this is probably gonna be able to tailor personalized development paths, probably connect me to both general information as well as specific information within my company, in a lot faster way than I've probably been able to have before, allowing me to upskill, allowing for me to like easily document those new skills that I have (I would assume).

(42:55):

Which I think is really great, because I think sometimes the snags in internal mobility platforms is sometimes the manual nature of having to go in and fill stuff out. And I think I'm excited by both the generative aspects of something that's personalized to me that then can generate — use the newest, hottest, coolest — things, to be able to educate me or connect me with the best external learning. But then I'm also excited about a future where that skills-based model is like, “Just write.” And like a conversational interface where I can ask it questions or get guidance without logins to seven different systems, without me having to fill out forms, just easily surface information that will benefit me, that'll feel confidential, that'll also really help me in a career. Like at this point we're talking about, “What would the best career coach do for you?” Well, they'd advocate for you aggressively and loudly. They'd hook you up with the right content and the right skills to make sure that you're eligible for that next job. You're starting to get to these places where it's really neat to have an assistant or this co-pilot concept, but I think you're also potentially getting to a place where you're getting great mentorship and great coaching along the way, that could be really cool for this skills-based model.

Adam Godson (44:25):

Yeah. Love it. Love it. Diana, I'm gonna ask you about maybe some of the downsides of this. So I think some that have surfaced are: the idea of people making fake candidates or GPT-generated responses to job applications. I think assessments are one that can be vulnerable if they require text-based answers. In that way, I think large language models have “passed the bar exam” and are AWS cloud-certified, and lots of other entrance exams. Tell me about some things that this opens the door for that people might keep their eye on.

Diana McKenzie (45:07):

Yeah, I think if we go back to those principles of: bias, data privacy, and explainability. If we think about any model that we either develop internal to our own companies or a model that's part of a product that we acquire and use. Having a team that's very clear on those different aspects of whatever it is the model is trying to accomplish can be very helpful. I think the aspect of bias is really all based on the data set itself. So if there's not appropriate representation of all of the different demographics that you wanna have in that base model, then your model's likely going to be biased. So even just starting with: what data do you have? What data are you using? And then, what data are you leveraging, and are you confident in whatever comes out on the other end? To a point that you made, Adam, giving you a set of information against which a human ultimately applies judgment and makes a final decision is a great way to separate the two components of this, from a downsides perspective.

(46:17):

I also know and am supportive of some companies that have just blocked this capability on their company portal completely. And these are highly regulated companies. They handle a lot of our personal information, whether it's on an insurance or a medical side of things. And I don't think that's bad. I mean, I think, if over time there's an opportunity to maybe build another layer on top of ChatGPT, and that layer says, “I'm smart enough to filter out: that looks like personal information, that looks like IP, right? That looks like something that you shouldn't be putting in the model.” Blocks that. And then you can take advantage of a model like this, right? I think there are a variety of ways for companies, as they start to move in the direction of taking advantage of this, to protect themselves. Notwithstanding the fact that many companies train all of their employees on the importance of protecting the data and the privacy of their colleagues, which is a good place to start. So just a couple of thoughts.
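As a rough illustration of the filtering layer Diana describes, the Python sketch below checks a prompt for a few obvious kinds of personal information and masks them before the text would be sent to any external model. The patterns, labels, and the `redact` function are assumptions made for this example; a real filter would need much broader coverage (names, addresses, intellectual property) and is not something the speakers specify.

```python
import re

# Illustrative patterns only (assumed for this sketch); real personal-data
# detection needs far more than three regular expressions.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def redact(prompt: str):
    """Return (redacted_prompt, labels_found) for a prompt typed by a user."""
    found = []
    for label, pattern in PATTERNS.items():
        if pattern.search(prompt):
            found.append(label)
            # Replace every match with a visible placeholder.
            prompt = pattern.sub(f"[{label} REMOVED]", prompt)
    return prompt, found


if __name__ == "__main__":
    text, labels = redact("Email jane.doe@example.com or call 555-123-4567")
    print(text)    # the masked prompt
    print(labels)  # which kinds of data were detected
```

A company could use the returned labels either to block the request outright or to forward only the masked text, depending on its own policy.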

Adam Godson (47:17):

Yeah, those are great. And I think that goes to the maturity of this, and the progression is, eventually this will start to get built into the tools we already use, but with governance layers that help keep companies safe and compliant on that. So, an example for us is being able to understand the text someone enters into Olivia and understand what to do with it. And then if it's a question, then we can have that answered. If it's someone wanting to enter data or reschedule an interview, we can react to that differently. So I think being able to have those application layers and use the APIs for large language models, rather than those interfaces that they've built, that's a great opportunity to merge compliance with the productivity.
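The routing idea Adam outlines, deciding whether a message is a question, a reschedule request, or data entry and reacting differently to each, can be sketched in a few lines of Python. This is a simplified stand-in that uses keyword rules instead of a language model, and every name in it (`classify_intent`, `HANDLERS`, `route`) is hypothetical. It does not describe how Olivia or any Paradox product works.

```python
# Hypothetical keyword rules standing in for a real intent model.
RESCHEDULE_PHRASES = ("reschedule", "move my interview", "different time")
QUESTION_WORDS = {"what", "when", "where", "who", "why", "how", "is", "can", "do"}


def classify_intent(message: str) -> str:
    """Label a candidate message with one of three assumed intents."""
    text = message.lower().strip()
    if any(phrase in text for phrase in RESCHEDULE_PHRASES):
        return "reschedule_interview"
    first_word = text.split(" ", 1)[0]
    if text.endswith("?") or first_word in QUESTION_WORDS:
        return "question"
    return "data_entry"


# Each intent gets its own handler, so each kind of message is treated differently.
HANDLERS = {
    "reschedule_interview": lambda message: "Offering new interview times.",
    "question": lambda message: "Looking up an answer.",
    "data_entry": lambda message: "Saving the details you provided.",
}


def route(message: str):
    """Return (intent, reply) for a message."""
    intent = classify_intent(message)
    return intent, HANDLERS[intent](message)


if __name__ == "__main__":
    for example in (
        "Can I reschedule my interview?",
        "What is the pay for this role?",
        "My phone number is 555-0100",
    ):
        print(route(example))
```

Keeping the classification step separate from the handlers means a more capable classifier could be swapped in later without changing how each intent is handled.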

Joshua Secrest (48:06):

Adam, I'm not sure how much you're able to share, but as you think about your product team and the roadmap for the next couple years, how much are you trying to work with ChatGPT and generative AI and kind of weave that in? Versus, hey, what are all the things that our clients might need that are supplemental to this thing that's kind of really taking off?

Adam Godson (48:29):

Yeah, so we're actually tremendously lucky in many ways that we have been a conversational interface since our beginning. And so when the spark moment hit late last year of folks sort of understanding that the interface now exists with ChatGPT, that is the new interface. We're really well positioned for that. And so we will continue to use a variety of methods to do that. So there's sort of the narrow set AI that we have today, which really understands recruiting deeply. So there's not a single way you could want to schedule or reschedule an interview that we have not <laugh> detected in language so far. We've got a giant model built on seven years of doing this.

(49:18):

But also we're really excited about the generative future and do see that progression of building that into our product and lots of new things to be released this year related to that. But also sensitive to organizations for whom that's a leap too far. And so being able to give users the right control over what is generated and what is prescriptive in that way. So lots of new uses for the technology that we have. And we're really excited to have been working with it for several years and then to continue to build into our product.

Joshua Secrest (49:53):

It's really interesting for conversational AI — I mean, probably the expectations for all of us are now continuing to increase, right? We get to play with ChatGPT, which can iterate and can kind of remember previous parts of the conversation and loop that back in, and kind of keeps getting more sophisticated. We're seeing it more from our consumer end. What have you learned from coming to Paradox and building out the conversational moments for us: where we still need to have some guardrails when we're doing this for enterprises or when the conversational AI is really speaking on behalf of you as the organization?

Adam Godson (50:35):

Yeah, I think just understanding what the context is, and limiting to that context. So Paradox operates in a talent acquisition context, so there are a whole host of things that there's no reason for us to get into and have an answer for. And so we do not need to answer questions about someone's favorite music or any political event <laugh> of the past or present. And so being focused on a narrow set and then driving to get work done, that's what it's always been about. The parts that are really important, I think the part that's really, really exciting is we get to have richer, more personalized conversations, because of some of the new technology with large language models. But it's also important that we stay in the context that we're trying to stay in and don't create any unnecessary risk.

Joshua Secrest (51:30):

That's helpful. That's great.

Adam Godson (51:35):

That is great. So maybe I'll ask sort of a general question for you and Diana, if I can. You're a talent acquisition practitioner and working at a larger company today — What guidance or advice do you have to take the next steps in using generative AI?

Diana McKenzie (51:55):

Some of the guidance, I think, you and Josh have already shared during this discussion, and that is where you have a base of data that can help you, help your team work more effectively and potentially in a more automated manner, where you're relatively confident the result's going to be safe. I think you could apply that there. You've already talked about building job descriptions, right? That is one way to do it. Writing offer letters, to pull different information together, and then obviously giving someone the opportunity to review the offer letter before it finally goes out. But anything that allows someone within the human resources or people organization to spend more of their time either with candidates or with the employees in the company, is gonna be a great place for them to start.

(52:44):

There are companies that have been in this space for a while, that have been investing in machine learning and artificial intelligence, and they've all been getting vetted for many, many years. So working with companies that have a proven model and can answer all of these questions we've spoken about when you talk to them, about how much they can help you internally to your organization. And Paradox is a great example of one of those, where they have a lot of experience. And they've been focused on this for quite some time. I think if you have an opportunity to talk to companies that are just getting started, and I think this was Adam's caution in the beginning, there's a rush to get to the market with a product. I think it's a “ship it or zip it” is what I heard <laugh> on a podcast earlier this week. They probably don't have the experience. They probably can't back up what's behind the selection aspects of their model. And maybe you slow down and go with the trusted and true organizations that are out there, especially where you're working in a space and you're wanting to be very thoughtful about the people in your organization and treating them with respect.

Adam Godson (53:51):

Yeah, I love it. Love it. I actually had a direct question come in for you in the Q and A, Diana, which is: How safe or unsafe is ChatGPT compared to putting IP into Word or PowerPoint on the cloud or the company VPN? What guidance would you give there from a company security standpoint?

Diana McKenzie (54:10):

Yeah, I feel like Word and PowerPoint and even Google Docs — these companies have worked very, very hard to build privacy and security into their cloud versions. They wouldn't be where they are, they wouldn't have the number of people using their products, if they didn't have a really good story to tell on the privacy security backend of what they're doing. So I feel like that's been there for a while. I think when it comes to using ChatGPT, you're basically just adding data to a large base of data that has been taken from so many different places. You really don't know where all the data's come from and ideally you're not putting data into that model that shouldn't be out there. So I think it's a much higher level of caution on the ChatGPT side of things just because of how early we are. I don't lose any sleep at night when I use Office 365 or Google Docs at this point, personally, or then in companies — in both places as a CIO, where we use both of those products and feel pretty good about what's behind them.

Adam Godson (55:18):

Yeah. And I think there are a lot of chapters yet to be written on how companies do that and what the terms of service involve, so early, early days.

Joshua Secrest (55:26):

This may be another one of those chapters, Adam. I'm not sure there's an answer to this, but it was an interesting topic in the chat, which is: what happens when like a ChatGPT-perfected candidate aligns with sort of the ChatGPT-employer. So, “Write me a resume that optimizes my chances of getting selected by x specific employer for y specific role, leveraging the information on how they write and evaluate candidates.” Like this is the first time I've seen that phrase from a recruiting perspective. I think it's really interesting, because we've seen it probably emerge most prevalently in the education space of someone writing a paper. And how do you kind of assess whether that was actually them or some other mechanism? Anything you've read on these topics and what will we continue to learn here?

Adam Godson (56:30):

I think the important part is that all sides are gonna continue to use the tools they have available to optimize their own individual chances. And so that's where the recruiters that are using this are gonna get themselves an edge for a while. And there'll be times where those things are both being used at the same time. And that's where I think, again, people ultimately make those decisions that make the final impact of those. And the rest is trying to get through other administrative things or get through gates, as they may. So it'll be really interesting. And I think there's other risks too, about: lots of really great written outreach messages can now be automated. And so I think I was having a debate the other day with someone about: if a perfect automated interview existed, would it matter? And my position was — I think the ultimate veracity of that interview is that someone cared to take the time to talk to you, not that the conversation was smooth and had good voice content. And so people actually taking their own time and caring can't be replaced by machines.

Joshua Secrest (57:39):

Really interesting.

Adam Godson (57:42):

Well, I think we're just about up and I wanna be respectful of everyone's time. I wanna say a huge thank you to the two of you. Thank you for your thoughts and for all of your insights. Thank you to all of you for joining. You will get some follow-up from us with the recording, a digest, and other things. And also don't hesitate to reach out on LinkedIn, email, however you can. As you can tell, we love the conversations, so please continue it with us. So thanks so much and have yourself a fantastic rest of your day.

Meet the speakers.

A 10 year veteran in the HR tech space, Adam was previously chief technology and product innovation officer at Cielo, a recruitment process outsourcing (RPO) firm. Now at Paradox leading a global product team, his task is simple: build the world's leading conversational AI platform for talent.

Josh has led talent acquisitions teams for some of the world’s largest brands, including McDonald’s and Abercrombie and Fitch — designing people programs and experiences that helped those brands fundamentally transform hiring. As VP of marketing and advocacy at Paradox, Josh collaborates with global employers to champion their transformation efforts.

After serving as the CIO of Amgen for six years, Diana McKenzie joined Workday as its first-ever Global Chief Information Officer in 2016. A thought leader and frequent speaker on digital transformation and diversity, she co-founded the Silicon Valley Women’s CIO Network in 2017.
