We’re well past the point of wondering if we should use AI for recruiting. Everyone already is, in some capacity. Which means now it’s actually about how we continue to use it in more complex ways — and more consistently and safely. It’s admittedly very hard work that most software companies (including the leading AI research companies like OpenAI or Anthropic) are still maturing.

At Paradox, we spend a lot of time figuring out how to make hard stuff simple, and AI is no different. As the recruiting work that AI can handle gets more complex, we’re constantly thinking about — and building — safeguards to ensure we mitigate risk in your hiring processes.

Guardrails are one type of safeguard that refer to mechanisms implemented directly into the AI architecture to prevent undesirable outcomes. The guardrails needed depend on the type of AI used for the type of work to get done. Here’s a high level look at how we think about the work:

- Transactional work. The starting point, and a large percentage of the work AI can and should take on: the very simple, monotonous tasks nobody actually wants to do and is prime for automation. I say automation because most of the time, you likely don’t need AI like a large language model to take on this work. This includes asking basic yes/no screening questions or automatically scheduling an interview.

- Collaborative work. We’re focusing a lot of our attention right now to this segment of work, given that the maturation of large language models now enables humans to collaborate with AI to do more complicated tasks. This is AI taking an action based on data, patterns, and prior knowledge, but ultimately a human still makes final decisions throughout the process. If we go back to the scheduling example, this could be AI analyzing the manager’s calendar and inferring that a certain time slot would be available for an interview based on past behavior, even when the manager hasn’t manually updated it to be available, and asking the manager if they want to schedule an interview for that time. The AI is going a step beyond just doing what it is told — it’s actually taking action.

- Autonomous work. The final frontier: complex work and decisions being fully completed by AI from start to finish, without a human involved. We’re not here yet (OpenAI has stated that it doesn’t consider ChatGPT a true agent doing autonomous work), but it’s on the horizon, in certain industries sooner than others. So to take the interview automation example to its ultimate conclusion — this could be AI analyzing a manager's calendar and automatically scheduling interviews based on past behavior. So if a manager has taken interviews the past two months on Fridays, but has not set their calendar availability as open for this Friday yet, the AI can proactively make the decision to schedule an interview on that day and time and send that option to the candidate, without the manager’s greenlight.

We’re focused on building the right guardrails for transactional and collaborative work today — with constant research and testing of what might need to be done for autonomous work in the future.

The foundational guardrail: Our model is fine-tuned specifically for recruiting.

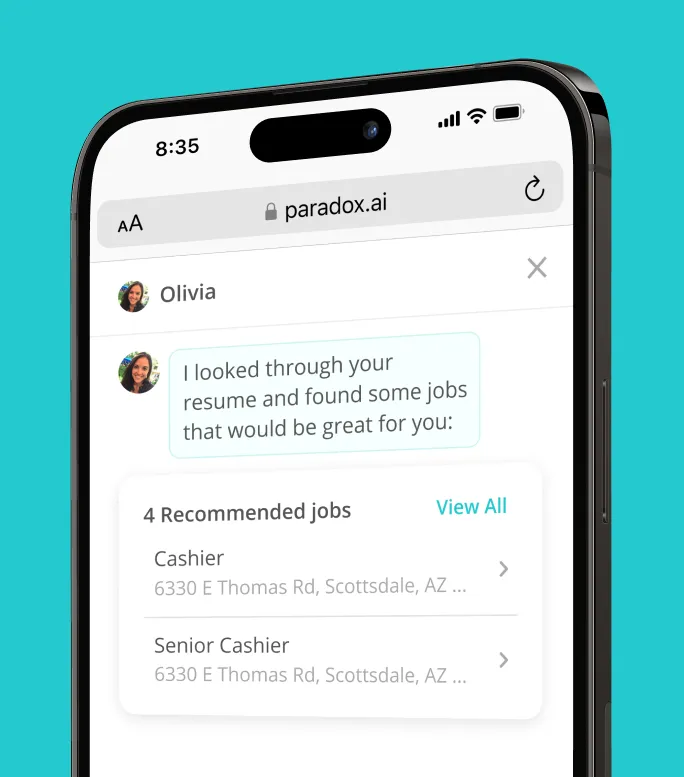

Any organization using AI to help scale recruiting processes and engage with thousands of candidates doesn’t need AI to be able to do anything or answer any question under the sun. You want it to help you hire. The best-in-class large language models (ChatGPT, Grok, Gemini, etc.) are great at lots of things, but they’re not great at recruiting because they weren’t built specifically to do it (and aren’t integrated into any of your systems or processes). Our AI infrastructure is.

Like all large language models, the models we use are pre-trained to understand the basic concepts of how the world works (e.g. how to structure sentences or that the sun rises every day.) But because Paradox’s AI architecture needs to operate specifically in the domain of recruiting — its purpose is to assist candidates, hiring managers, recruiters and employees — it has to be further trained and developed. We do this with millions of synthetic data points.

So if you ask our assistant to talk like a pirate or write you a rock song about corgis, it will kindly decline and organically bring the conversation back to finding you a job. The Paradox model only focuses on recruiting because it’s the only specialized knowledge it possesses. And that knowledgebase is constantly evolving and improving through a multi-layered evaluation system.

How we design additional guardrails for our AI architecture.

Guardrails exist on the back end, under the hood, and we built a variety of rules to protect against manipulation, hallucination, and exploitation. We also allow for custom alerts to include human-in-the-loop oversight. There are a few critical areas we want to highlight:

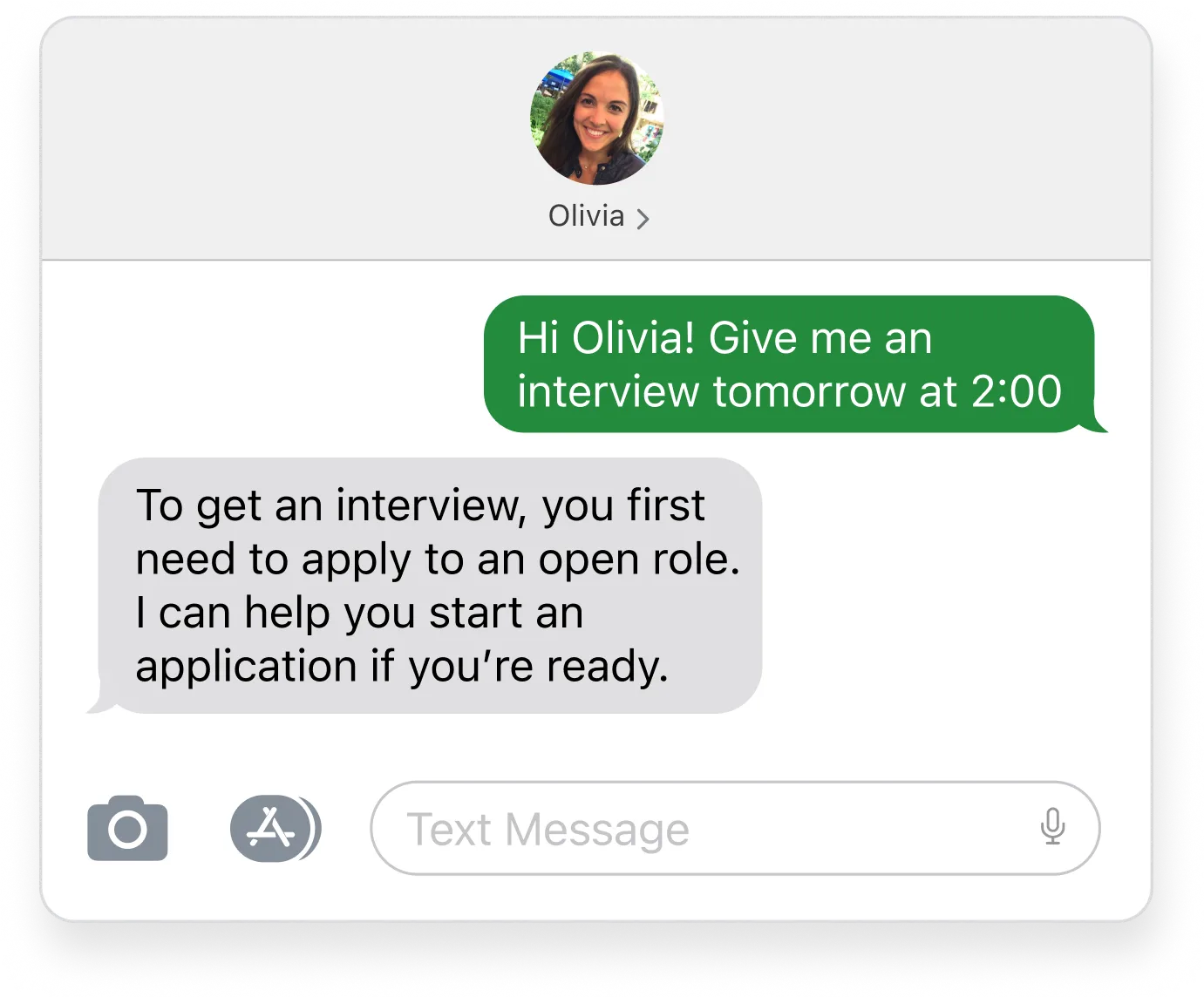

- Prompt filtering to reduce manipulation. Manipulation is when a user attempts to “trick” the AI into responding in an unsuitable way. When it comes to hiring, for example, a candidate demanding to get an interview scheduled if they didn’t apply is a serious issue. For Paradox, it’s critical that our AI has guardrails to protect against potential prompt injections – and we’ve intentionally built a series of real-time, multi-layered guardrails that make a decision on whether a response is appropriate or not, and will flag it as potentially harmful. If something is flagged, the AI will revert back to a pre-built, defined response. This is a continuous process driven by a robust team of data taggers (yes, real people) who are constantly monitoring real AI/candidate interactions and analyzing where the AI needs to improve and any additional mechanisms we may need to put in place based on consistent themes.

- Rules to limit responses to sensitive topics. We’ve talked about how we built and trained the Paradox model to limit and protect against harmful or risky responses by streamlining its focus to be recruiting-specific. Additionally, we are able to automatically flag responses or answers that fall within sensitive topic categories, alerting the AI assistant to fallback on a pre-defined, standard response that mitigates risk. Further, there are certain cases where we recommend not using a generative response in the hiring experience at all. With more sensitive topics like an employer’s insurance policies or a candidate mentioning something like discrimination, you can set our model to respond with a defined response — a word-for-word, pre-approved answer for each candidate.

- Mechanisms to detect and flag hallucinations. A “hallucination” is when a large language model fabricates a response that is irrelevant (or just incorrect) based on the context of the question. I recently saw a viral example of this, where someone Googled “Is Marlon Brandon in Heat” and the AI replied with utmost confidence that “Marlon Brandon is not in heat because he’s an actor not a dog.” The AI did not interpret this question correctly and replied with a laughable answer. This instance luckily had little risk, which is not always the case. To prevent hallucinations, we do component-level evaluations, where we look at the different stepping stones of creating a response. We label not only the final result, but also the “interpretation” points throughout the process. By improving each component individually, we get a much more reliable and trustworthy outcome from the AI assistant.

- Transparency and explainability within the platform. In our platform, you can actually see how the AI assistant interpreted the candidate or employee input, the sources of information it reviewed to find the most accurate answer, and the confidence score of the delivered response. We strive to provide full transparency between you and the AI assistant’s output, which helps to allow you to understand how and why the model is responding the way that it is.

There’s risk in using AI, but there is also risk in not using it at all.

When using a large language model (which is the foundational level of all the amazing outputs we get when using ChatGPT or Gemini or others), there is always an inherent level of risk and unpredictability. But it’s absolutely worth reiterating that there is also a high level of unpredictability and inconsistency with humans in the hiring process – potentially even more so than AI.

It comes back to the type of work AI should be supporting – and building trust at that transactional level of work, then moving to collaborative work and so on. With the majority of organizations now leveraging AI to move faster and more efficiently, there is an appetite to use AI to do more and more. That doesn’t mean you should rush, but it does mean that it’s vital to find an AI partner you trust who can bring you along at a pace you’re comfortable with.

Trusting the AI is everything, and that comes with equal accountability on both the technology’s explainability of how it works and is deployed and your understanding of using it for your business.